4 AI Agent Patterns You Should Know

Since the company I work for started investing heavily in voice virtual agents, I thought I should learn about the basics and the status quo.

In this article, we are going to explore four common design patterns for AI agents.

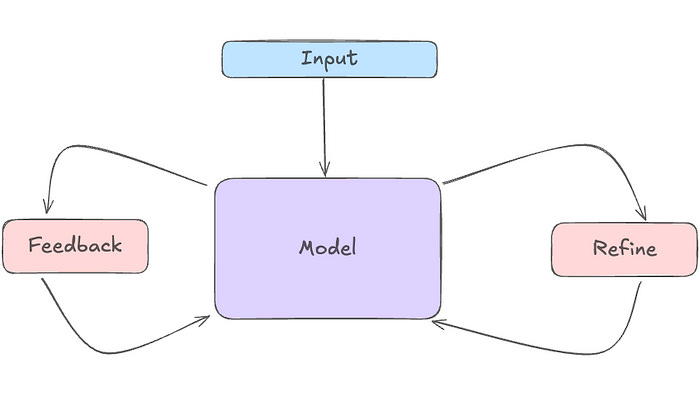

Reflection Pattern

This pattern involves agents analyzing and improving their own performance. Agents use self-evaluation to refine their outputs and decision-making processes.

This is a fairly simple design pattern and can be implemented with a few lines of code.
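To make the "few lines of code" claim concrete, here is a minimal sketch of a reflection loop. The `reflect` function, the `toy_llm` stand-in, and the convention that a critique of "OK" means "no more issues" are all my own assumptions for illustration; in a real system you would swap `toy_llm` for a call to an actual model.

```python
from typing import Callable

# Hypothetical stand-in for a real LLM call: takes a prompt, returns text.
LLM = Callable[[str], str]


def reflect(task: str, llm: LLM, max_rounds: int = 3) -> str:
    """Generate a draft, then alternate between critique and revision."""
    draft = llm(f"TASK: {task}")
    for _ in range(max_rounds):
        critique = llm(f"CRITIQUE this answer to '{task}':\n{draft}")
        # Assumed convention: the critic replies "OK" when it has no feedback.
        if critique.strip() == "OK":
            break
        draft = llm(
            f"REVISE the answer to '{task}'.\n"
            f"Answer:\n{draft}\nFeedback:\n{critique}"
        )
    return draft


def toy_llm(prompt: str) -> str:
    """Scripted fake model so the sketch runs without any API access."""
    if prompt.startswith("TASK:"):
        return "total = 0\nfor x in xs:\n    total += x"
    if prompt.startswith("CRITIQUE"):
        return "OK" if "sum(xs)" in prompt else "Use the built-in sum()."
    return "total = sum(xs)"  # reply to a REVISE prompt


print(reflect("sum a list of numbers", toy_llm))
```

The same model plays both the author and the critic here; only the prompt changes. The `max_rounds` cap keeps the loop from running forever if the critic never approves.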

Two example use cases where the reflection pattern performs better than a single pass through a generic LLM are:

Code Optimizations

Dialogue Response

The image below shows how the LLM refines its output in both the dialogue and the code optimization example.

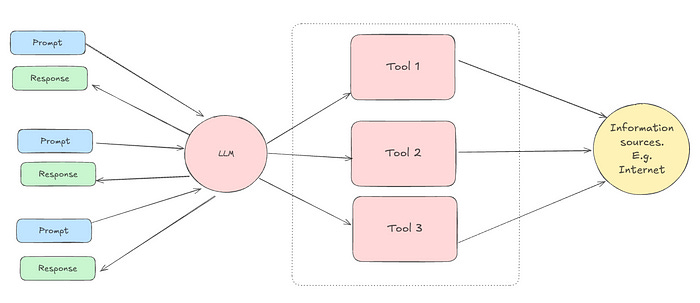

Tool Use Pattern

Here is how the pattern works:

Function Description: The LLM is provided with detailed descriptions of the available tools, including their purposes and required parameters.

Tool Selection: Based on the task at hand, the LLM decides which tool to use.

Function Calling: The LLM generates a special string to call the selected tool, typically in a specific format (e.g., JSON).

Execution: A post-processing step identifies these function calls, executes them, and returns the results to the LLM.

Integration: The LLM incorporates the tool’s output into its final responses.
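The five steps above can be sketched roughly as follows. Everything here is an assumption for illustration: the `TOOLS` registry, the JSON shape with `tool` and `arguments` keys, the `TOOL_RESULT:` marker, and the scripted `fake_llm`. Real providers each define their own function-calling format.

```python
import json
from typing import Callable


def add(a: float, b: float) -> float:
    return a + b


def word_count(text: str) -> int:
    return len(text.split())


# Function description: what the LLM would be told about each tool.
# (Names and schema are made up for this sketch.)
TOOLS: dict[str, dict] = {
    "add": {"fn": add, "description": "Add two numbers.", "params": ["a", "b"]},
    "word_count": {"fn": word_count, "description": "Count words.", "params": ["text"]},
}


def fake_llm(prompt: str) -> str:
    """Scripted model: first emits a JSON tool call, then a final answer."""
    if "TOOL_RESULT:" not in prompt:
        # Tool selection + function calling, as a JSON string.
        return json.dumps({"tool": "add", "arguments": {"a": 2, "b": 3}})
    result = prompt.split("TOOL_RESULT:")[1].strip()
    return f"The answer is {result}."  # integration of the tool output


def run(question: str, llm: Callable[[str], str]) -> str:
    reply = llm(question)
    try:
        call = json.loads(reply)
    except json.JSONDecodeError:
        return reply  # plain text, no tool call requested
    if not isinstance(call, dict) or call.get("tool") not in TOOLS:
        return reply
    # Execution: the post-processing step runs the tool outside the model.
    result = TOOLS[call["tool"]]["fn"](**call["arguments"])
    return llm(f"{question}\nTOOL_RESULT: {result}")


print(run("What is 2 + 3?", fake_llm))
```

Note that the model never executes anything itself. It only produces a string, and the surrounding code decides whether that string is a tool call.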

Unless you have been living under a rock, you have probably used GPT and noticed that it already does this automatically for certain tasks.

More advanced implementations, however, give LLMs access to hundreds of tools to expand their capabilities.

For example, Gorilla enables LLMs to use tools by invoking APIs. Given a natural language query, Gorilla comes up with the semantically and syntactically correct API to invoke.

With Gorilla, you can invoke 1,600+ (and growing) ML APIs.

Their CLI allows you to perform tasks with natural language. For example, you can run:

$ gorilla generate 100 random characters into a file called test.txt

And then it comes up with the CLI command:

cat /dev/urandom | tr -dc 'a-zA-Z0-9' | fold -w 100 | head -n 1 > test.txt

Planning Pattern

Here is how the pattern works:

Task Decomposition: An LLM acts as a controller that breaks down a task into smaller, manageable subtasks.

For each subtask, a specific model is chosen to perform the task.

The selected expert models execute the subtasks, and the results are returned to the controller LLM, which generates the final response.
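Here is a rough sketch of that controller loop. The `EXPERTS` table and the keyword-based `decompose` function are hypothetical placeholders: in a real system, an LLM would write the plan and the experts would be actual models rather than one-line functions.

```python
from typing import Callable

# Hypothetical "expert models": plain functions standing in for real models.
EXPERTS: dict[str, Callable[[str], str]] = {
    "summarize": lambda text: text.split(".")[0].strip() + ".",
    "translate": lambda text: f"[translated] {text}",
}


def decompose(task: str) -> list[str]:
    """Controller step: split the task into subtasks.

    A real controller LLM would produce this plan; keyword matching is an
    assumption made so the sketch runs offline.
    """
    lowered = task.lower()
    return [name for name in EXPERTS if name in lowered]


def run_plan(task: str, text: str) -> str:
    plan = decompose(task)
    if not plan:
        return text  # nothing to delegate
    result = text
    for subtask in plan:
        # Model selection + execution: each subtask goes to its expert.
        result = EXPERTS[subtask](result)
    # The controller assembles the final response from the expert output.
    return f"Ran {' -> '.join(plan)}: {result}"


print(run_plan("Summarize and translate this", "Agents plan. They also act."))
```

In this sketch the plan is fixed before anything runs. That is the simplest variant; a controller could also replan after seeing each intermediate result.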

One example where a planning pattern is used is HuggingGPT. HuggingGPT connects LLMs with the extensive ecosystem of models available on the Hugging Face platform.

Multi-Agent Collaboration

Here is how it works:

Specialized Agents: Each agent is designed to perform specific roles or tasks, such as a software engineer, a product manager, or a designer.

Task Decomposition: Complex tasks are broken down into smaller, manageable subtasks that can be distributed among the agents.

Communication and Coordination: Agents interact with each other, exchanging information and coordinating their actions to achieve common goals.

Distributed Problem Solving: The system uses the collective capabilities of multiple agents to address problems too complex for a single agent.
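As a toy illustration of these ideas, the sketch below wires three role-specific agents into a fixed hand-off chain. The `Agent` and `Team` classes and the `build_team` helper are names I made up, and each agent's `act` function stands in for an LLM prompted with that role. Real frameworks allow much freer interaction than this strict pipeline.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    role: str
    act: Callable[[str], str]  # stand-in for an LLM prompted with this role


@dataclass
class Team:
    agents: list[Agent]
    transcript: list[tuple[str, str]] = field(default_factory=list)

    def run(self, task: str) -> str:
        """Pass the work from agent to agent, logging every message."""
        message = task
        for agent in self.agents:
            message = agent.act(message)
            self.transcript.append((agent.role, message))
        return message


def build_team() -> Team:
    # Specialized agents, each handling one part of the overall task.
    return Team([
        Agent("product manager", lambda m: f"Spec for: {m}"),
        Agent("engineer", lambda m: f"Code implementing [{m}]"),
        Agent("reviewer", lambda m: f"Approved: {m}"),
    ])


team = build_team()
print(team.run("a todo app"))
for role, message in team.transcript:
    print(f"{role}: {message}")
```

The shared transcript is the communication channel here. Once agents are allowed to talk to each other in any order, the output gets much harder to predict.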

I don’t think there are a ton of real-world multi-agent systems out there yet since the technology is too immature. An example I found is ChatDev, which is a virtual software company with a CEO, CTO, engineers, etc.

Andrew Ng classifies the Planning and Multi-Agent Collaboration patterns as unpredictable:

Like the design pattern of Planning, I find the output quality of multi-agent collaboration hard to predict, especially when allowing agents to interact freely and providing them with multiple tools. The more mature patterns of Reflection and Tool Use are more reliable.

Andrew Ng

So, given his insights, I think the first two patterns are more likely to be used in current production systems.

There are even more! Cheers 😇