Our Last Kubernetes Ingress Production Incident — Explained in 5 Minutes

This one setting in NGINX caused unexpected API failures — here’s why.

One of the key ways I keep learning and growing as an engineer is by investigating critical production incidents, the kind that could cost our customers millions of dollars.

Recently, our Kubernetes traffic started hitting dead ends during a deployment. The issue traced back to our Ingress controller’s configuration flags.

The enable-serial-reloads option in NGINX Ingress, designed to prevent resource exhaustion by limiting worker process spawning, contains a subtle bug that blocks dynamic endpoint updates during configuration reloads. This seemingly harmless flag creates a critical window where traffic routing falls out of sync with the actual deployment state, potentially directing requests to non-existent pods.

Taking the time to explore such issues and their solutions — even when I’m not directly involved — helps me expand my knowledge beyond daily tasks. So, let’s dive in!

Managing Traffic in Our Kubernetes Cluster

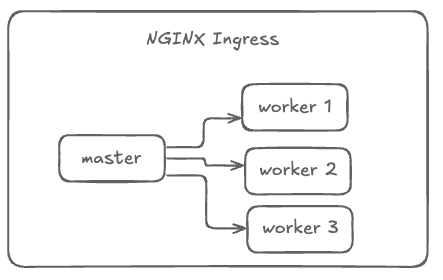

We have a standard setup with an Ingress controller managing incoming traffic to our Kubernetes cluster. We use NGINX for this purpose. The primary functions of an Ingress controller include:

Acting as a reverse proxy and load balancer for HTTP traffic entering the Kubernetes cluster.

Routing external traffic to the appropriate services within the cluster.

Providing features such as SSL termination, URL rewriting, and rate limiting.

The NGINX instance that the Ingress controller manages follows a master-worker architecture, which shapes how configuration updates are applied.

Master vs. Worker Processes in NGINX Explained

NGINX operates on a master-worker architecture, where the master process oversees configuration management and process control, while worker processes handle client connections and request processing.

NGINX Master Process:

Reads and validates configuration.

Manages worker processes.

Handles signals and process control.

NGINX Worker Processes:

Handle incoming client connections and process requests.

Run in parallel to utilize multiple CPU cores.

Serve content to clients efficiently.

When configuration changes occur, NGINX spawns new worker processes with the updated configuration while gracefully shutting down old workers to ensure seamless operation.

How Dynamic Configuration Changes Work

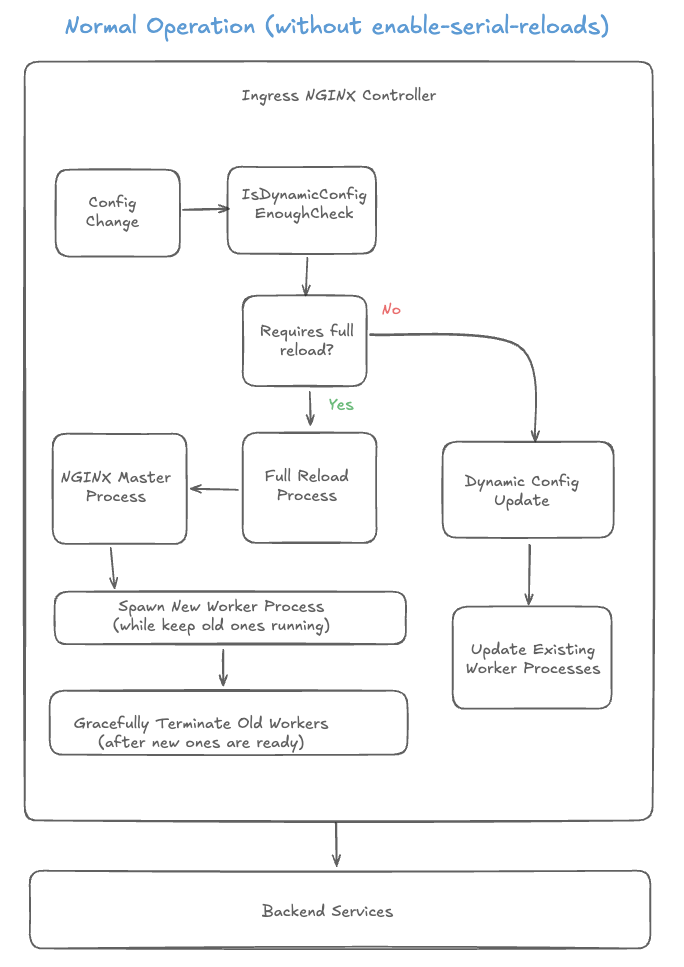

The NGINX Ingress controller applies configuration changes in two ways: Full Reload and Dynamic Configuration. The approach depends on the type of change being applied.

Full Reload: A full reload is required for major configuration changes. In this process:

The master process reads the updated configuration.

New worker processes are spawned with the new configuration.

Old worker processes are gracefully terminated to avoid service disruption.

Dynamic Configuration: For certain changes, such as backend endpoint updates, NGINX can apply configurations dynamically without requiring a full reload. In this case:

Changes are applied directly to existing worker processes.

No new worker processes need to be spawned.

The process is faster and more resource-efficient, minimizing service interruptions.

By using dynamic configuration whenever possible, NGINX optimizes performance and reduces unnecessary process restarts.
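To make the distinction concrete, here is a minimal Python sketch of how a controller might choose between the two paths. The `Backend` and `Config` types and the `plan_update` function are hypothetical names I made up for illustration. The real ingress-nginx controller is written in Go and its check is more involved, so treat this as a simplified model rather than its actual logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Backend:
    """Hypothetical, simplified view of one upstream service."""
    name: str
    endpoints: frozenset  # pod addresses such as "10.0.0.1:8080"


@dataclass(frozen=True)
class Config:
    """Hypothetical model: routing rules plus the backends behind them."""
    rules: tuple     # e.g. (("api.example.com", "/v1", "api"),)
    backends: tuple  # tuple of Backend


def strip_endpoints(cfg: Config) -> Config:
    """Return a copy of cfg with every backend's endpoint set emptied."""
    return Config(
        rules=cfg.rules,
        backends=tuple(Backend(b.name, frozenset()) for b in cfg.backends),
    )


def plan_update(old: Config, new: Config) -> str:
    """Pick the cheapest way to move from old to new."""
    if old == new:
        return "noop"
    # If the configs match once endpoints are ignored, only pod addresses
    # changed, so existing workers can be updated in place.
    if strip_endpoints(old) == strip_endpoints(new):
        return "dynamic"
    # Anything else (new rules, new backends, ...) needs new workers.
    return "full-reload"
```

In this model, a rollout that only swaps pod IPs behind an existing service yields `"dynamic"`, while adding a new routing rule yields `"full-reload"`.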

The Problem: Deployment Rollouts Blocked by Serial Reloads

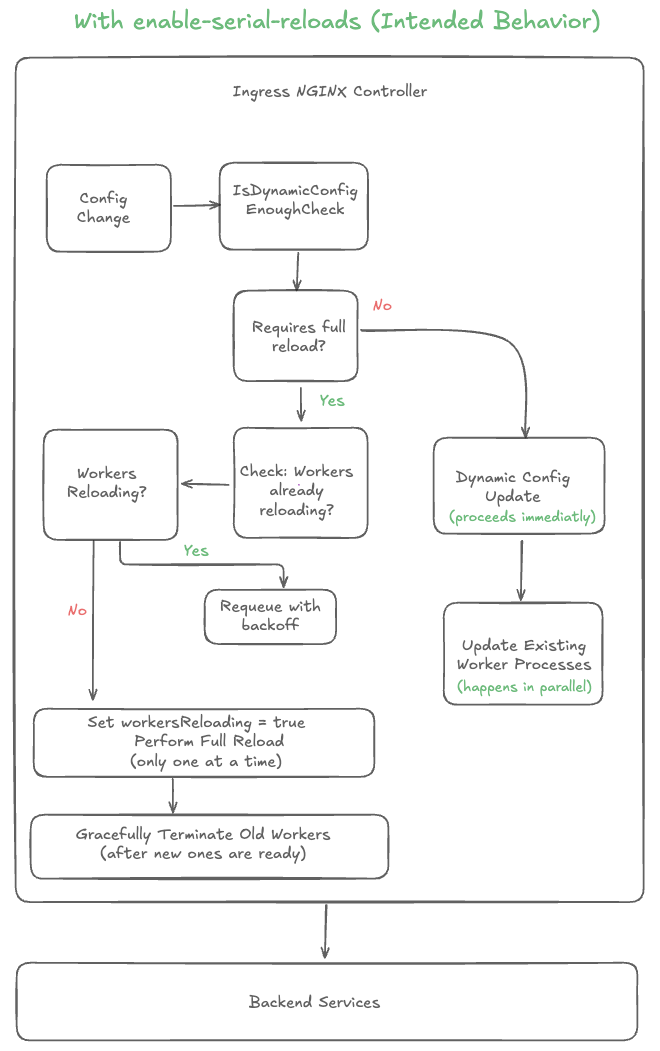

At Cresta, we had the enable-serial-reloads option enabled. This setting prevents too many NGINX worker processes from spawning simultaneously when multiple configuration changes occur in quick succession.

However, enabling this flag introduced an unintended side effect: it blocks dynamic configuration changes, such as backend endpoint updates, that would normally not require a full NGINX reload.

This becomes problematic when:

A long-running NGINX reload is in progress.

During this time, endpoint changes occur (e.g., during a deployment rollout).

These endpoint updates are not applied until the initial reload is fully completed.

Impact:

Some API requests timed out due to delayed configuration updates.

The system experienced unnecessary latency and disruptions during deployments.

For more details, refer to the GitHub issue in kubernetes/ingress-nginx that was opened as a result of this incident.

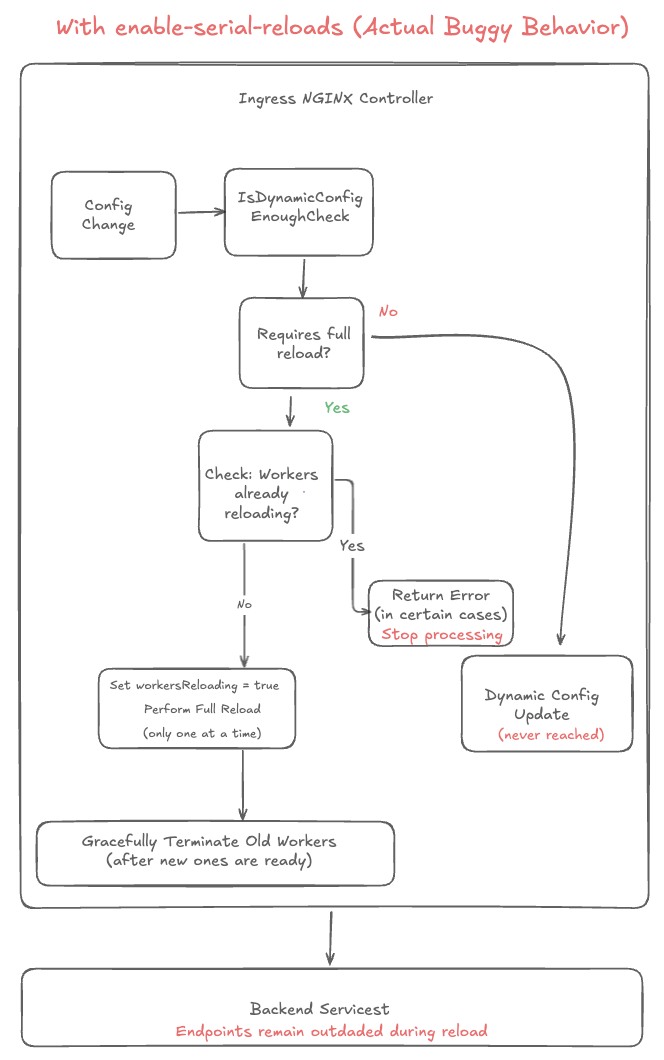

This issue can be better understood by comparing three different scenarios:

Normal Operation (without enable-serial-reloads): How NGINX typically handles full reloads and dynamic updates in parallel.

With enable-serial-reloads (Intended Behavior): The expected outcome when this option is enabled to prevent excessive worker spawning.

Buggy Behavior with enable-serial-reloads: The unintended side effect where dynamic updates get blocked during long-running reloads.

Below, I break down each case to illustrate how configuration changes are processed under different conditions.

1.) Normal Operation (without enable-serial-reloads)

Parallel Processing: Full reloads and dynamic updates happen independently without blocking each other.

Multiple Workers: New worker processes are spawned for each full reload while old ones continue running.

No Serialization: Multiple full reloads can happen simultaneously, potentially creating many worker processes.

Resource Intensive: This approach provides immediate updates but can lead to high memory usage during multiple concurrent reloads.
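A rough back-of-the-envelope calculation shows why this gets expensive. The sketch below assumes that every full reload starts one complete new generation of workers and that all older generations are still draining connections at the same moment. The function name and the numbers are mine, chosen only for illustration.

```python
def peak_worker_processes(workers_per_generation: int, overlapping_reloads: int) -> int:
    """Upper bound on live worker processes.

    Counts the original generation plus one extra generation for each
    reload that starts before the older workers have finished draining.
    """
    return workers_per_generation * (1 + overlapping_reloads)


# 8 workers per generation and 4 reloads in quick succession:
print(peak_worker_processes(8, 4))  # 40
```

Under the same assumptions, allowing only one reload in flight at a time would cap this at two generations, which is the resource problem the serial option is meant to address.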

2.) With enable-serial-reloads (Intended Behavior)

Serialized Full Reloads: Only one full reload can happen at a time, preventing worker process explosion.

Independent Dynamic Updates: Endpoint changes are applied immediately without waiting for full reloads.

Resource Efficient: Limits the number of worker processes to prevent high memory usage and CPU load.

Balanced Approach: Provides resource efficiency while maintaining responsiveness for endpoint changes.

3.) Buggy Behavior with enable-serial-reloads

Early Error Return: When workers are reloading, the controller returns an error that stops all processing.

Blocked Dynamic Updates: Endpoint changes are not applied until the current reload completes.

Outdated Endpoints: During deployment rollouts, traffic may be sent to outdated or terminated pods.

Delayed Service Discovery: The bug defeats the purpose of dynamic configuration for quick endpoint updates.
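The three scenarios can be compared side by side with a small Python model of a sync loop. This is my own simplified sketch of the behavior described above, not the controller's actual code: `Mode`, `Controller`, `sync`, and `rollout` are hypothetical names, and a single boolean stands in for "an earlier reload is still draining old workers".

```python
from enum import Enum


class Mode(Enum):
    PARALLEL = "without enable-serial-reloads"
    SERIAL_INTENDED = "enable-serial-reloads, intended"
    SERIAL_BUGGY = "enable-serial-reloads, buggy"


class Controller:
    def __init__(self, mode: Mode):
        self.mode = mode
        self.live_endpoints = set()          # what the workers route to
        self.reloads_started = 0
        self.workers_shutting_down = False   # an earlier reload is draining

    def sync(self, desired_endpoints, needs_full_reload: bool) -> str:
        """One pass of a simplified sync loop."""
        busy = self.workers_shutting_down
        if self.mode is Mode.SERIAL_BUGGY and busy:
            # Early return: nothing below runs, including the cheap
            # endpoint update that never needed a reload.
            return "deferred: reload in progress"
        if needs_full_reload:
            if self.mode is Mode.SERIAL_INTENDED and busy:
                status = "reload deferred"
            else:
                self.reloads_started += 1
                self.workers_shutting_down = True
                status = "reload started"
        else:
            status = "no reload needed"
        # Dynamic update: applied to existing workers, no new processes.
        self.live_endpoints = set(desired_endpoints)
        return status + ", endpoints updated"


def rollout(mode: Mode) -> set:
    """A pod is replaced while a long-running reload is still draining."""
    c = Controller(mode)
    c.live_endpoints = {"10.0.0.1:8080"}   # old pod
    c.workers_shutting_down = True
    c.sync({"10.0.0.2:8080"}, needs_full_reload=False)
    return c.live_endpoints
```

Calling `rollout` with `Mode.PARALLEL` or `Mode.SERIAL_INTENDED` leaves the model routing to the new pod at `10.0.0.2:8080`. With `Mode.SERIAL_BUGGY` it still points at `10.0.0.1:8080`, the pod that has already been replaced, which is the failure pattern we saw during deployments.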

Growth Happens by Exploring the Unknown

Despite having a heavy workload that day, I decided to take some time to explore the bug in more depth.

As I was reading through the Slack messages related to the issue, I saw that one of the 10x engineers at Cresta, attempting to resolve the problem, had written:

“I’m restarting Ingress.”

Then, I imagined a scenario:

“What if I were the only person online right now and had to restart NGINX Ingress? What steps would I need to take?”

Once I had a clear understanding, I sent my findings to the 10x engineer and asked:

“Hey, is this the correct way to restart the NGINX Ingress?”

The entire process took me about an hour, but in that single hour, I learned more than I would have in two weeks of just sticking to my usual tasks. Why? Because I explored a part of the tech stack I was unfamiliar with — something I wouldn’t have encountered otherwise.

So the key takeaway is this:

Take time out from your day-to-day tasks to explore other parts of the system, especially during a production incident. Ask yourself:

“If I were the only person online right now, could I resolve this issue?”

By adopting this mindset, you’ll continuously expand your technical knowledge and grow as an engineer.
